adtech

FinOps

Some time ago a discussion started about CIO vs CMO when it comes to ad tech, and as far as I can see, it still continues. As a technical professional in the ad tech space, I have followed it with interest.

As I was building ad tech in the cloud (which usually involves large scale: think many millions of QPS), the business naturally became quite cost-conscious. It was then that I, meditating on the above CIO-CMO dichotomy, thought that perhaps the next thing is the CIO (or the CTO) vs the CFO, or together with the CFO.

What if the decision on whether to commit cloud resources (and what kind of resources to commit) to a given business problem is dictated not purely by technology but by financial analysis? E.g., a report is worth it if we can produce it using mostly spot instances; if it goes beyond a certain cost, it is not worth it. Etc.

These are all very abstract and vague thoughts, but why not?

Recently I learned of an effort that seems to more or less agree with that thought, the FinOps Foundation, so I am currently checking it out.

Sounds interesting and promising so far.

And nice badge too.

A look at app-ads.txt

Introduction

App-ads.txt is a follow-up to IAB’s ads.txt initiative aimed at increasing transparency in the programmatic ad marketplace. Related to it are a number of other initiatives and standards such as supply chain, payment chain, sellers.json, various things coming out of TAG group, etc. See last section for relevant links.

TL;DR: This standard allows an ad inventory buyer (DSP) to verify whether the entity selling the inventory (SSP) is authorized by the publisher to sell it. This is done by looking up the publisher’s URL for an app (bundle) in the app store and examining the app-ads.txt file at that URL. The file lists authorized sellers of the publisher’s inventory, along with the ID by which the publisher is identified in each seller’s system (publisher.id in OpenRTB).

Terminology

Here, the words “publisher” and “developer” may be used interchangeably; ditto for “app” and “bundle”.

First pass implementation

The algorithm is fairly simple: for each app (aka bundle) of interest, grab the app publisher’s domain from the appropriate app store, fetch the app-ads.txt file from that domain and parse it. Of course, in theory there is no difference between theory and practice, but in practice there is: there are some deviations from the standard and exceptional cases that had to be taken care of in the process.
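The parsing step can be sketched as follows. This is a minimal illustration, not the appadsparser.py script itself: it assumes the comma-separated record layout inherited from ads.txt (ad system domain, publisher account ID, DIRECT/RESELLER, optional certification authority ID), and the names `AdsEntry` and `parse_app_ads_txt` are made up for this example.

```python
from typing import NamedTuple, Optional


class AdsEntry(NamedTuple):
    domain: str                       # field 1: ad system (SSP/exchange) domain
    publisher_id: str                 # field 2: publisher's account ID there
    relationship: str                 # field 3: DIRECT or RESELLER
    cert_authority_id: Optional[str]  # field 4: optional


def parse_app_ads_txt(text: str) -> list[AdsEntry]:
    """Parse app-ads.txt content, silently skipping anything that is not a record."""
    entries = []
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()   # drop comments
        if not line:
            continue
        fields = [field.strip() for field in line.split(",")]
        if len(fields) < 3:
            continue   # variable declarations (contact=...) or malformed lines
        relationship = fields[2].upper()
        if relationship not in ("DIRECT", "RESELLER"):
            continue   # real-life files deviate from the standard; be tolerant
        cert = fields[3] if len(fields) > 3 and fields[3] else None
        entries.append(AdsEntry(fields[0].lower(), fields[1], relationship, cert))
    return entries
```

Lowercasing the domain and upper-casing the relationship are tolerance choices for this sketch; the publisher ID is kept exactly as written, since it has to be compared with what arrives in the bid request.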

As a first pass, we are running this process semi-manually; if the results warrant full automation, this can easily be accomplished. Here is what was done (more technical details can be found on GitHub):

- Bundle IDs and other information were retrieved from the request log for the last few days (really, requests for all bids in May as of end of May 6), using an Athena query. This comes to a total of:

- 1,144,377 bids

- 613,241 unique bundle IDs.

- 685,221 bundle-SSP combinations

- The result set, exported as a CSV file, is loaded into a SQLite DB. The same SQLite DB is also used for caching results.

- Go through the list of bundles with a Python script, appadsparser.py. While the standard specifies how app stores should provide publisher information, only Google Play Store currently follows it. For Apple’s App Store, we query its search API’s lookup service and, if the app is not found there, crawl the App Store page directly (though this appears to be a violation of robots.txt).

- The result is a semi-structured log. The summary is below.
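The CSV-to-SQLite step, with the same database doubling as a result cache, could look roughly like this. It is a sketch under assumptions: the table names (`bids`, `results`) and the CSV column names (`bundle_id`, `ssp`, `publisher_id`) are hypothetical, not the schema actually used.

```python
import csv
import sqlite3


def load_bids(conn, csv_file):
    """Load the exported query result (a file-like object) into SQLite."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS bids (bundle_id TEXT, ssp TEXT, publisher_id TEXT)"
    )
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        "bundle_id TEXT, ssp TEXT, result_code TEXT, PRIMARY KEY (bundle_id, ssp))"
    )
    rows = [
        (r["bundle_id"], r["ssp"], r["publisher_id"]) for r in csv.DictReader(csv_file)
    ]
    conn.executemany("INSERT INTO bids VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


def pending_pairs(conn):
    """Bundle-SSP combinations that have no cached result yet."""
    return conn.execute(
        "SELECT DISTINCT b.bundle_id, b.ssp FROM bids b "
        "LEFT JOIN results r ON r.bundle_id = b.bundle_id AND r.ssp = b.ssp "
        "WHERE r.bundle_id IS NULL ORDER BY b.bundle_id, b.ssp"
    ).fetchall()


def cache_result(conn, bundle_id, ssp, result_code):
    """Remember the outcome so that a rerun does not fetch the same site again."""
    conn.execute(
        "INSERT OR REPLACE INTO results VALUES (?, ?, ?)", (bundle_id, ssp, result_code)
    )
    conn.commit()
```

Keeping the cache next to the input means an interrupted semi-manual run can simply be restarted and will pick up where it left off.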

Results Notes

Result codes

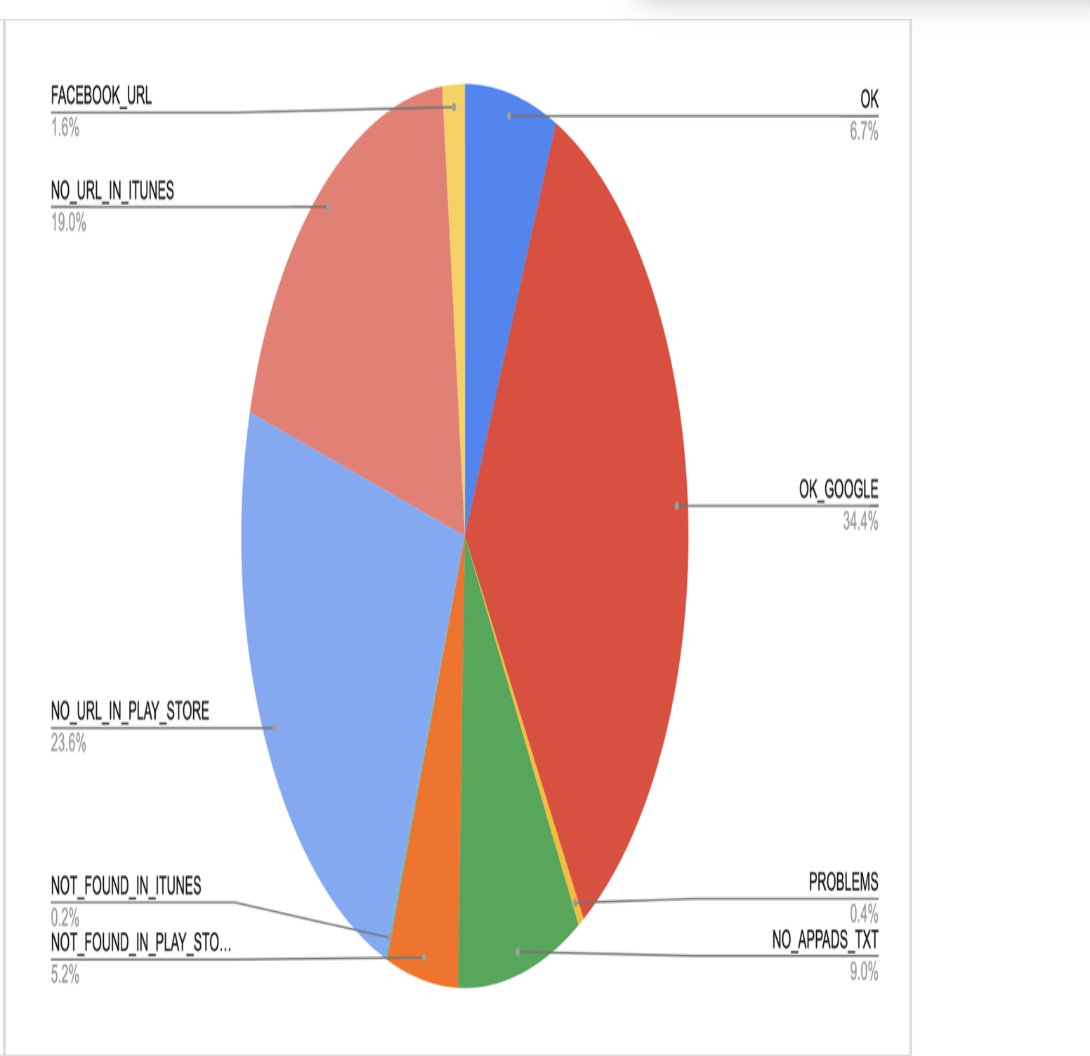

The following are result codes and their meaning:

- OK – The SSP we got the traffic on is authorized by the bundle publisher’s app-ads.txt for the publisher ID specified in request

- OK_GOOGLE – Google is authorized by the bundle publisher’s app-ads.txt. See below on explanation of why Google is special.

- PROBLEMS — Mismatch found between authorized SSPs and/or publisher IDs in app-ads.txt.

- NO_APPADS_TXT – Publisher’s website has no app-ads.txt file

- NOT_FOUND_IN_PLAY_STORE – bundle not found in Google Play Store. NOT_FOUND_IN_ITUNES – same for Apple App Store.

- NO_URL_IN_PLAY_STORE – cannot find publisher’s URL in Google Play Store. NO_URL_IN_ITUNES – same for Apple App Store.

- FACEBOOK_URL – publisher’s URL points to a Facebook page (this happens often enough that it warrants its own status)

- BAD_DEV_URL – publisher’s URL in an app store is invalid

- BAD_BUNDLE_ID – store URL (from the OpenRTB request) is invalid, and cannot be determined from the bundle ID either

Note on Google

NOTE: At the moment, there is no way to check the publisher ID for Google due to an internal issue. In other words, we cannot verify the following part of the spec:

Field #2 - Publisher’s Account ID - This must contain the same value used in transactions (i.e. OpenRTB bid requests) in the field specified by the SSP/exchange. Typically, in OpenRTB, this is publisher.id.

Given Google’s aggressive anti-fraud enforcement, we can for now stipulate that it would not run unauthorized inventory. There is still the possibility of fraud, of course. But in the below table we distinguish between bundles served via Google (where we do not check for publisher ID, just the presence of Google in app-ads.txt) and those served via other SSPs, where we cross-reference the publisher ID.

As a corollary of the above you will not see Google inventory under “PROBLEMS” status.
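The distinction between the two checks can be sketched like this. It is an illustration under assumptions: `entries` stands for the (domain, publisher ID) pairs already parsed from a publisher's app-ads.txt, the function names are hypothetical, and treating `google.com` as the only Google domain is a simplification.

```python
def check_non_google(entries, ssp_domain, publisher_id):
    """Cross-reference both the SSP domain and the publisher ID from the request."""
    authorized = {(domain.lower(), pub_id) for domain, pub_id in entries}
    if (ssp_domain.lower(), publisher_id) in authorized:
        return "OK"
    return "PROBLEMS"


def google_is_listed(entries, google_domain="google.com"):
    """For Google we only check presence; the publisher ID is not compared."""
    return any(domain.lower() == google_domain for domain, _ in entries)
```

For example, with `entries = [("google.com", "pub-1"), ("ssp.example", "42")]`, a request from `ssp.example` with publisher ID `43` yields `PROBLEMS`, while any Google-served bundle counts as `OK_GOOGLE` simply because `google_is_listed(entries)` is true.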

This gives a good sample:

| Result code | Count |
| --- | ---: |
| OK | 5,693 |
| OK_GOOGLE | 29,330 |
| PROBLEMS | 361 |
| NO_APPADS_TXT | 7,653 |
| NOT_FOUND_IN_PLAY_STORE | 4,417 |
| NOT_FOUND_IN_ITUNES | 134 |
| NO_URL_IN_PLAY_STORE | 20,112 |
| NO_URL_IN_ITUNES | 16,168 |
| FACEBOOK_URL | 1,378 |
| BAD_DEV_URL | 4 |
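To put these counts in proportion, here is a small calculation over exactly the numbers above (nothing else is assumed). It shows that the two OK statuses together come to roughly 41% of the sample.

```python
counts = {
    "OK": 5693,
    "OK_GOOGLE": 29330,
    "PROBLEMS": 361,
    "NO_APPADS_TXT": 7653,
    "NOT_FOUND_IN_PLAY_STORE": 4417,
    "NOT_FOUND_IN_ITUNES": 134,
    "NO_URL_IN_PLAY_STORE": 20112,
    "NO_URL_IN_ITUNES": 16168,
    "FACEBOOK_URL": 1378,
    "BAD_DEV_URL": 4,
}

total = sum(counts.values())
verified_share = (counts["OK"] + counts["OK_GOOGLE"]) / total

# Print each status with its share of the sample, largest first.
for code, count in sorted(counts.items(), key=lambda item: -item[1]):
    print(f"{code:<26}{count:>8,}{count / total:>8.1%}")
print(f"verified (OK + OK_GOOGLE): {verified_share:.1%}")
```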

Summary of findings

It appears that, because adoption of this standard is not very high at present (developer URLs are missing from app stores, or the app-ads.txt file is missing from the developer’s domain), there is not much utility to it at the moment. However, as the adoption rate is increasing (see below), this is worth revisiting. Also, consider that for this exercise we only used bid information: not a sample of the full traffic, but just what we have bid on. This may not be representative of the entire traffic, which may be interesting to explore.

Consider also that the developer may well have the app-ads.txt file on the website, but if the website is not properly listed in the app store, we have no way of getting to it (yet SSPs may include those sites in their overall numbers, see, e.g., MoPub below).

What does the industry say?

- Google Play Store is reported to have only ~8% adoption. Worth quoting here is this section of the report:

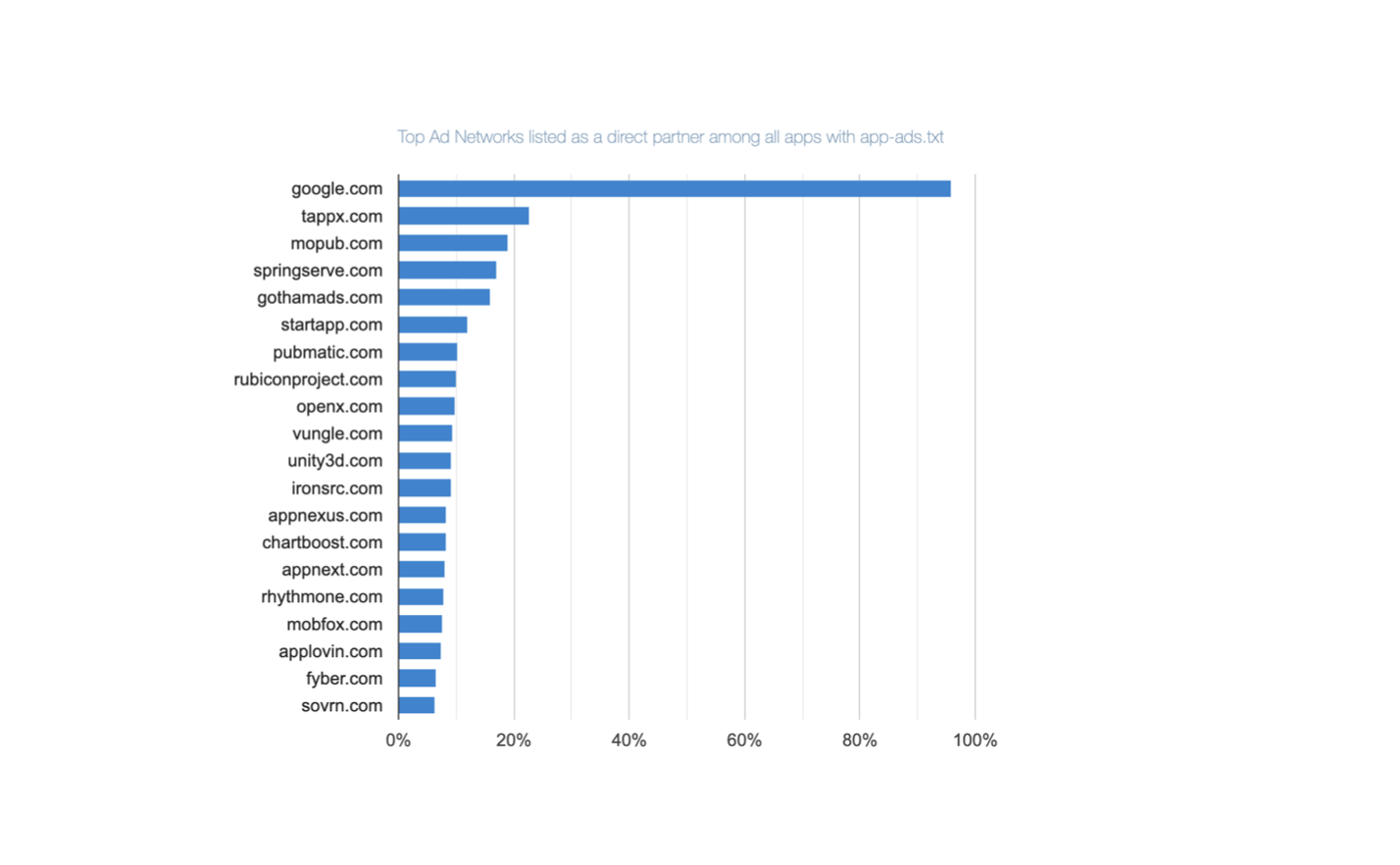

Who Are the Top ‘Direct’ Ad Partners Inside the App-Ads.txt in Google Play Apps?

Direct ad partners are those which have been granted direct permission by app developers to sell app ad space. That is, they are explicitly listed on the app’s “App-ads.txt” file. Google.com is listed as a direct ad partner on 95.87% of all “App-Ads.txt” files for Google Play apps. This makes it the most frequently mentioned direct ad partner for apps available on the Google Play.

- Pixalate’s 2019 app-ads.txt trends report is interesting:

- Doesn’t seem that app-ads.txt makes that much difference for IVT (invalid traffic) – apps with app-ads.txt have 18.7% of IVT vs 21.1% for those without (pg. 6), despite Pixalate dramatizing this 2.4 percentage point increase as a 13% increase (2.4/18.7 – lies, damn lies and statistics).

- It lists way higher numbers of adoption for Google Play Store apps than above but that is across top 1K apps.

- Increasing rates of adoption (~65% rise in Q4 2019 – pg.13)

- Unity, Ironsource, MoPub, Applovin, Chartboost – in that order – are in the top direct ad partners for Android apps (pg.18); MoPub, Unity, IronSource – in top direct AND resellers for iOS (pg.20)

- MoPub claims that “app-ads.txt file adoption exceeds 80% for managed MoPub publishers”. It’s unclear what the qualifier “managed” means. Sampling our data, we see issues with app-ads.txt on MoPub in about 48% of cases in total, breaking down as follows:

- No app-ads.txt: 11%

- No developer URL found in store: 22.5%

- Not found in app store: 13.7%
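The percentage-point versus relative-change arithmetic behind the IVT remark in the Pixalate bullets above is easy to check. The two rates are the ones quoted from the report; the variable names are just for this example.

```python
ivt_with_app_ads = 18.7     # % IVT for apps with app-ads.txt
ivt_without_app_ads = 21.1  # % IVT for apps without it

# Absolute difference, in percentage points.
point_diff = ivt_without_app_ads - ivt_with_app_ads

# The same gap expressed relative to each baseline.
relative_increase = point_diff / ivt_with_app_ads      # "without" vs "with"
relative_decrease = point_diff / ivt_without_app_ads   # "with" vs "without"

print(f"{point_diff:.1f} percentage points")
print(f"{relative_increase:.1%} relative increase")
print(f"{relative_decrease:.1%} relative decrease")
```

The same 2.4-point gap reads as about 13% or about 11% depending on which rate is taken as the baseline, which is why the headline number needs its denominator stated.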

Not found?

Additionally, it is worth looking at the bundles that are flagged as NOT_FOUND_IN_PLAY_STORE or NOT_FOUND_IN_ITUNES – how come those cannot be found?

This does not have to be something nefarious. For example, based on spot-checking, it can be due to:

- Case-sensitivity. Play Store bundles are case-sensitive but an SSP may normalize them to lower case when sending (e.g., com.GMA.Ball.Sort.Puzzle becomes com.gma.ball.sort.puzzle)

- Some mishap like non-existent com.rlayr.girly_m_art_backgrounds being sent (but com.instaforall.girly_m_wallpapers exists)
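The case-sensitivity problem can sometimes be worked around, as in the sketch below. It assumes we have a list of correctly cased bundle IDs from some other source (that list, and both function names, are hypothetical), and it refuses to guess when two known IDs differ only by case.

```python
def build_case_index(known_bundle_ids):
    """Map each lower-cased bundle ID to the correctly cased IDs we know of."""
    index = {}
    for bundle_id in known_bundle_ids:
        index.setdefault(bundle_id.lower(), []).append(bundle_id)
    return index


def recover_bundle_id(sent_id, index):
    """Return the unique correctly cased ID for what the SSP sent, else None."""
    candidates = index.get(sent_id.lower(), [])
    return candidates[0] if len(candidates) == 1 else None


index = build_case_index(["com.GMA.Ball.Sort.Puzzle"])
print(recover_bundle_id("com.gma.ball.sort.puzzle", index))
```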

But even if the majority of the NOT_FOUND errors are due to such discrepancies rather than fraud, it means that currently the app-ads.txt mechanism itself is de facto not very reliable.

Some other results

- Q: Are there any apps that present as different publishers on the same SSP?

- A: Not too many (about 8.6%). Even so, for the most important apps it is at most 2-3 publisher IDs, and even in the long tail the maximum is 10. This, though, can still account for some of the app-ads.txt PROBLEMS seen above.
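A question like this comes down to counting distinct publisher IDs per bundle-SSP pair. A minimal sketch, assuming the rows are (bundle ID, SSP, publisher ID) tuples pulled from the bid data (the row shape and function name are assumptions for illustration):

```python
from collections import defaultdict


def apps_with_multiple_publishers(rows):
    """Return {(bundle_id, ssp): publisher IDs} for pairs seen with more than one ID."""
    seen = defaultdict(set)
    for bundle_id, ssp, publisher_id in rows:
        seen[(bundle_id, ssp)].add(publisher_id)
    return {key: ids for key, ids in seen.items() if len(ids) > 1}


rows = [
    ("com.a", "ssp1", "p1"),
    ("com.a", "ssp1", "p2"),   # same app, same SSP, different publisher ID
    ("com.a", "ssp2", "p1"),
    ("com.b", "ssp1", "p9"),
]
print(apps_with_multiple_publishers(rows))
```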

Related documents, standards and initiatives

- App-ads.txt spec (which builds on ads.txt spec). Real life example – app-ads.txt on CNN’s site.

- Supply chain object

- Payment chain

- Sellers.json spec and related page

- Sellers.json and supply chain FAQ

- Pixalate – 2019 App Ads.txt Trends Report.pdf

OpenDSP’s DMP: Nanoput

Here I will describe the Nanoput project, which comprises a large part of OpenDSP’s DMP (Data Management Platform). There are, of course, other pieces — the entire picture will be painted under the DMP tag.

Overall DMP stack is as follows:

- Nanoput proper: NGINX with Lua to handle incoming requests. I’ll confess that at the moment this is a bit of a pet (as in not cattle). We are considering using OpenResty instead of our own build, which already uses parts of OpenResty. But no matter: here I will present some features that can be achieved with this setup, and one instance is capable of handling all of this.

- Redis for storing and manipulating user sets — ZSET is great

- MySQL for storing metadata — will be described in a separate post

- PHP/JS for a simple Web interface to define the said metadata

- Python for translating metadata into the configuration for NGINX

- AWS S3 for storing raw logs — pre-partitioned so that EMR can be used easily.

Conceptual introduction

Conceptually, let’s consider the idea of an “event”. An impression, a conversion, a video tracking event, a site visit, etc, is an event — anything that fires a request to our Nanoput is. You may recognize a similarity with Snowplow — and that is because we are solving a similar problem.

To proceed further, I must ask for a little indulgence, so please bear with me. As a technologist, it really grated on me to hear phrases like “Javascript pixel”, but I have learned to stop worrying and love the bomb. Therefore, a Javascript pixel it is when JS code fires some GET URLs. Now, then, the requests are assumed to be fired as HTTP GET requests (they do not have to be, but that is the primary idea behind Nanoput per se; management of metadata to ingest things via other means, like FTP upload, will be addressed separately) and can originate, for example, from:

- Exchanges or DMPs, as an exchange-initiated cookie-sync: see below.

- Regular user behavior: impressions; in the case of video, video-tracking events; conversions

- Calls to Nanoput that look as if they were initiated by exchanges or DMPs but in reality are, heh, Javascript pixels

Now, let us also consider the idea of a “user segment”. If you think about it, a segment is just a set of users. Thus, we may as well consider a user that produced a certain event as belonging to some segment. These may be explicitly asked for, such as “users we want to retarget”, or “users who converted”, etc. But there is no reason why any event cannot be defined as a segment-defining one.

Segments, here, are a special case of data collections concept discussed in a different post.

Given that, we can now dive into the Nanoput implementation.

General data acquisition idea

Here, we simply leverage basic NGINX functionality, namely logging. To that end, we split the main config file into included sections: those that deal with log format, and those that deal with location and behavior.

Static data acquisition URLs

By “static”, here we mean common use cases that are just part of Nanoput (hence the “man” subdirectory you will notice in examples, which, just like the Unix man command, stands for “manual”). Here we have:

- Site events (essentially, those are an extension of retargeting concept).

- Standard event tracking, by which we mean standard events that happen in the ad world.

Notice that we also augment information available from NGINX (HTTP headers, etc.) with geo data using the GeoIP module and with user-agent/device/OS information.

Dynamic (metadata-driven) data acquisition URLs

Dynamic data acquisition works simply: a process reads the metadata table and creates appropriate entries in the NGINX configs that define log format and location and behavior.
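The metadata-to-config translation might be sketched like this. It is not the actual generator: the shape of the metadata row (`name`, `params`), the `/dyn/` URL prefix, the `evt_` naming and the log directory are all hypothetical. The NGINX pieces it emits (`log_format`, `access_log`, `empty_gif`, `$arg_...`, `$uid_got`, `$uid_set`) are standard directives and variables.

```python
import re


def render_event_config(row, log_dir="/var/log/nginx"):
    """Turn one metadata row into (log_format line, location block).

    The two pieces are returned separately because log_format belongs in the
    http context while the location belongs inside a server block.
    """
    name, params = row["name"], row["params"]
    for token in [name, *params]:
        # Metadata comes from a web UI, so never paste it into a config unchecked.
        if not re.fullmatch(r"[A-Za-z0-9_]+", token):
            raise ValueError(f"unsafe token in metadata: {token!r}")
    fields = ["$time_iso8601", "$uid_got", "$uid_set"]
    fields += [f"$arg_{param}" for param in params]
    joined = "|".join(fields)
    log_format = f"log_format evt_{name} '{joined}';"
    location = "\n".join([
        f"location = /dyn/{name} {{",
        f"    access_log {log_dir}/evt_{name}.log evt_{name};",
        "    empty_gif;",
        "}",
    ])
    return log_format, location


fmt, loc = render_event_config({"name": "signup", "params": ["campaign", "src"]})
print(fmt)
print(loc)
```

Generating one log file and one format per event keeps the raw logs self-describing, at the cost of an NGINX reload whenever the metadata changes.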

Creating “segments”

On every “event”, consider script. We use Redis’s awesome Sorted Set functionality here, inserting things twice. The key idea here, again, is a variation on dealing with data gravity concerns by just duplicating storage. We create two sorted sets for each key, the “score” being, respectively, the first and the last time we have seen the user. The reasoning for this is that:

- First-seen: we can write batch scripts to get rid of users we have last seen over X days ago (expiring targeting).

- Last-seen: helps us with conversion attribution (yes, this assumes naive last-click attribution or variants).

Duplication is not just for every user — it is for every set. The key here is the set (or segment) name, and the value is the set of users.

An added benefit of this is that new segments can be created by using various Redis set operations (union, intersection) easily.
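The double insert can be sketched in Python (the real thing runs as Lua inside NGINX, so this is only an illustration of the idea). The `:first`/`:last` key suffixes are hypothetical. The `zadd(name, mapping, nx=...)` call follows the redis-py signature, so a real `redis.Redis()` client could be passed in; the tiny in-memory stand-in is there only to make the example runnable without a server.

```python
import time


def record_event(client, segment, user_id, now=None):
    """Insert the user into both sorted sets of a segment."""
    now = time.time() if now is None else now
    # NX adds only new members, so the score stays at the first time seen.
    client.zadd(f"{segment}:first", {user_id: now}, nx=True)
    # A plain ZADD overwrites the score, so it tracks the last time seen.
    client.zadd(f"{segment}:last", {user_id: now})


class FakeRedis:
    """In-memory stand-in implementing only the two calls used here."""

    def __init__(self):
        self.zsets = {}

    def zadd(self, name, mapping, nx=False):
        zset = self.zsets.setdefault(name, {})
        added = 0
        for member, score in mapping.items():
            if nx and member in zset:
                continue
            added += member not in zset
            zset[member] = float(score)
        return added

    def zscore(self, name, value):
        return self.zsets.get(name, {}).get(value)


client = FakeRedis()
record_event(client, "retarget", "user-1", now=100)
record_event(client, "retarget", "user-1", now=250)
print(client.zscore("retarget:first", "user-1"), client.zscore("retarget:last", "user-1"))
```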

Some useful shortcuts for a DMP

- Getting OS/browser info without necessarily using WURFL (though that can easily be fronted by NGINX too, actually).

Exchange cookie sync

In the display world, there is a need for cookie syncing between a DSP and a third-party DMP or an exchange/SSP, and the sync can be exchange-, DMP- or DSP-initiated, or both. Some exchanges may allow the redirect chain to proceed further, some may not. Nanoput provides this functionality for the exchanges we deal with, as well as a template for doing it for other partners, all at the speed that NGINX provides. Here are the moving parts:

- On a partner (SSP/exchange or DMP)-initiated sync, the /man/xchin URL calls the xchin.lua script that, depending on known partner policy, responds with a proper redirect (coded specifically to ensure maximum redirects). You may notice that this could be initiated by the DSP itself, and you would not be wrong.

- The DSP itself may initiate a cookie sync, and this is controlled by the xchout.lua script.

Storing for further analysis

Raw logs, formatted as above, are uploaded to S3. Notice that they are stored twice, with different partitioning schemas. This is one of the key ideas in Nanoput: storage is cheap, so we duplicate the storage this way and then use one or the other partitioning schema depending on the use case:

- Partitioned by date — useful for most internally-provided reporting

- Partitioned by user. Here, it’s important to see that this is a multi-tenant system; in this context, “user” is a client/customer. This partitioning is useful for giving customers the ability to run their own custom queries. See also notes on data duplication and data gravity.
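Storing each log file under two partitioning schemes amounts to computing two object keys for the same upload. A minimal sketch, where the key layout (`by-date/`, `by-customer/`, Hive-style `dt=` and `customer=` segments) is an assumption for illustration and not the actual bucket structure:

```python
from datetime import datetime, timezone


def s3_keys(customer_id, event_time, filename):
    """Return (date-partitioned key, customer-partitioned key) for one log file."""
    day = event_time.strftime("%Y-%m-%d")
    by_date = f"by-date/dt={day}/customer={customer_id}/{filename}"
    by_customer = f"by-customer/customer={customer_id}/dt={day}/{filename}"
    return by_date, by_customer


keys = s3_keys("acme", datetime(2020, 5, 6, tzinfo=timezone.utc), "events.log.gz")
print(keys[0])
print(keys[1])
```

Internal reporting would then scan a single `dt=` prefix, while a customer's own queries could be confined to their `customer=` prefix.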

Cookies coming home to Proust

Ok, I couldn’t resist the title, and also too, Billy Collins’ line about “No cookie nibbled by a French novelist could send one into the past more suddenly” is just awesome so why not… But I digress…

At OpenDSP, we, obviously, cookie (yes, it is a verb — to cookie) users. While more details can be had in other posts (such as Nanoput, and more under DMP tag), here I’ll just address the format issue.

The cookies we use are known as mod_uid cookies, after the Apache module. (A good writeup is done here). Because we use NGINX and, consequently, its uid_set/uid_got functionality, one may be puzzled when reconciling the cookie as sent to the user with what NGINX writes in its logs. So here are a few conversion helpers:

- Python: convert cookie to hex

- Python: convert hex to cookie

- Go: convert cookie to hex — if you are wondering why Go, Go’s key feature of compiling to a standalone binary — which can then be used easily anywhere — is why.
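For reference, the two Python conversions can be sketched as below. This rests on my understanding of the format rather than on the original snippets: the cookie value is the Base64 encoding of 16 bytes, and NGINX logs the same 16 bytes as four 32-bit words printed in host byte order, which on a little-endian server means each 4-byte group appears byte-reversed. If your server is big-endian, `"<4I"` would need to become `">4I"`. The function names are made up for this example.

```python
import base64
import struct


def cookie_to_hex(cookie_value):
    """Convert the Base64 cookie value into the hex form seen in NGINX logs."""
    # Some clients drop the trailing "==", so restore padding if needed.
    padded = cookie_value + "=" * (-len(cookie_value) % 4)
    raw = base64.b64decode(padded)
    if len(raw) != 16:
        raise ValueError("expected 16 bytes of uid data")
    return "".join(f"{word:08X}" for word in struct.unpack("<4I", raw))


def hex_to_cookie(hex_value):
    """Convert the 32-hex-digit log form back into the Base64 cookie value."""
    if len(hex_value) != 32:
        raise ValueError("expected 32 hex digits")
    words = [int(hex_value[i:i + 8], 16) for i in range(0, 32, 8)]
    return base64.b64encode(struct.pack("<4I", *words)).decode("ascii")


print(cookie_to_hex("AAECAwQFBgcICQoLDA0ODw=="))
```

The sample value is just the bytes 0 through 15, chosen so that the per-word byte reversal is easy to see in the output.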